Inclusive Recruiting on UX Research Platforms

Posted by David Muñoz on Apr 28, 2021

Platforms designed to facilitate UX research can cut costs and time, but these benefits often come at diversity’s expense: the participant panels these platforms draw from often lack diversity. Your product needs feedback from diverse and marginalized populations to ensure you meet their needs. To get it, you’ll need to take some extra steps.

We’ve developed a set of approaches to increase your ability to get diverse feedback while using these UX Research platforms.

Trade-off #1: Speed versus recruiting criteria control

Online research platforms specialize in finding the first available participants that meet your study’s eligibility criteria. It often only takes minutes to recruit six participants. With more traditional recruiting, individuals are screened over the phone, which might take days or weeks.

The downside of speedy platform recruits is the limited control over recruiting criteria and quotas. The nature of these platforms also introduces constraints, including:

- A limited number of questions (typically 5-10), depending on the platform and subscription. The more questions you include, the greater the chance that a potential participant will exit the screener before finishing. This greater drop-off may force the platform to send a second email blast to a new subset of panel participants, incurring an additional cost that may impact your subscription.

- Participants’ expectations of effort. Since unmoderated studies usually take less than 20 minutes, participants don’t expect to spend much time filling out a screener. They also expect a fairly low incentive given the limited amount of time they spend during a study.

- Limited control over participant selection. This process is either a slow, manual approval process or entirely out of your control - there is no in-between.

In comparison, traditional recruiting firms can accommodate upwards of 20 screening questions, a number that isn’t possible on UX Research platforms. These firms set expectations with potential participants accordingly and take responsibility for confirming that participants meet all study criteria. They also give you the final say on demographic quotas, which many platforms cannot offer, so this route gives you the most quota control. For example, you can request to recruit precisely one Black participant or request at least one but no more than three participants over 55 years old.
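If you track recruits in a spreadsheet or script, the quota examples above can be expressed as simple minimum/maximum rules. The following is a minimal sketch, not a feature of any recruiting platform: the `Quota` class, the `check_quotas` helper, the field names `race` and `age`, and the six sample recruits are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Quota:
    """A hypothetical quota rule: how many matching participants are allowed."""
    label: str
    matches: Callable[[dict], bool]
    minimum: int = 0
    maximum: Optional[int] = None  # None means no upper limit


def check_quotas(participants, quotas):
    """Return {label: (count, ok)} for each quota."""
    report = {}
    for quota in quotas:
        count = sum(1 for p in participants if quota.matches(p))
        ok = count >= quota.minimum and (
            quota.maximum is None or count <= quota.maximum
        )
        report[quota.label] = (count, ok)
    return report


# The two quota examples from the paragraph above.
quotas = [
    Quota("Black participants", lambda p: p["race"] == "Black",
          minimum=1, maximum=1),
    Quota("Over 55", lambda p: p["age"] > 55, minimum=1, maximum=3),
]

# Made-up recruits, for illustration only.
recruits = [
    {"race": "Black", "age": 61},
    {"race": "White", "age": 34},
    {"race": "Asian", "age": 58},
    {"race": "Latino", "age": 29},
    {"race": "White", "age": 47},
    {"race": "White", "age": 70},
]

report = check_quotas(recruits, quotas)
for label, (count, ok) in report.items():
    print(f"{label}: {count} recruited, quota {'met' if ok else 'not met'}")
```

A check like this only tells you whether a finished recruit satisfies your quotas. On a first-come, first-served platform you would still need manual approval, or a separate study per population, to enforce them.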

Trade-off #2: Speed versus recruiting panel diversity

UX Research platforms recruit participants almost exclusively online, with low incentives compared to traditional methods. They also do not provide affordances for participants with disabilities. These factors negatively affect the overall diversity of their participants, and as a result, platform panel members tend to skew:

- Under 35 years old

- White/Caucasian

- Residents of urban areas

- More tech-savvy

Since study sign-up is first-come, first-served, you could miss out on recruiting a participant from an underrepresented population (e.g., based on race/ethnicity or age) simply due to the timing of when you launch your study and who is available. You are also unlikely to reach any participants with impairments (e.g., visual, motor, cognitive, and/or auditory).

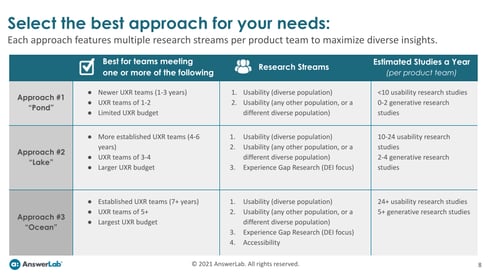

All teams face time and budget pressure

We advise teams to implement one of our three adaptable approaches for using research platforms to get fast and diverse feedback while being cost-sensitive.

UX Research teams vary in size, budget, and workflow. Below, we outline three approach levels that consider team size and resources: Pond, Lake, and Ocean. We recommend starting with the foundation-level approach, Pond. As your team grows in size and inclusivity maturity, your approach may evolve into the Lake and Ocean systems.

For a comparison table of these approaches and example timelines, download the extended version of this article.

Each of the three approaches we describe contains multiple, ongoing research streams that focus on different types of feedback. For all approaches, we recommend splitting a traditional n=6 usability test into two smaller tests, each with n=3-4:

- Test #1 would only recruit those in a diverse population based on your company’s inclusivity metrics (e.g., recruiting more participants who identify as LGBTQIA+).

- Test #2 could recruit on a first-come, first-served basis if you need feedback the same day. However, if your time and budget are more flexible, you could focus this second test on a different diverse population (e.g., adults 55+ years old).

Before implementing one of these approaches, be sure to talk with your UX Research platform’s Customer Success Manager about any possible impact on your subscription’s cost. Confirm how many total participants or tests per year your subscription covers and how many studies you can have live simultaneously on your account. While you're talking to your Customer Success Manager, be sure to share that you'd like to see features that help you recruit more diverse participants.

For any of these approaches, make sure you educate stakeholders on how and why you are including more diverse participants in research. Help them learn how to digest findings without tokenizing participants. Emphasize that each participant’s lived experience may vary from someone with a similar background.

Approach #1 Research Pond

We recommend this approach when teams meet one or more of the following criteria:

- Your UXR team is newly formed (1-3 years)

- You have 1-2 researchers

- Budget is limited

- Usability (diverse population)

- Usability (any other population, or a different diverse population)

- <10 usability research studies

- 0-2 generative research studies

Approach #2 Research Lake

This approach is best for teams meeting one or more of the following criteria:

- Your team is established (4-6 years)

- You have 3-4 researchers

- You have a larger budget

- Usability (diverse population)

- Usability (any other population, or a different diverse population)

- Experience Gap Research (DEI focus)

- 10-24 usability research studies

- 2-4 generative research studies

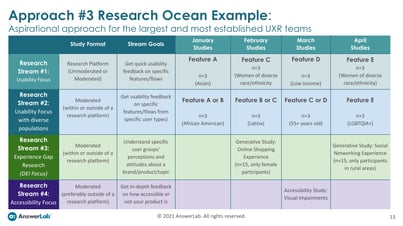

Approach #3 Research Ocean

The Ocean approach is the aspirational approach for large, established teams (7+ years) with a significant budget. This approach works best for teams with a minimum of five researchers.

- Usability (diverse population)

- Usability (any other population, or a different diverse population)

- Experience Gap Research (DEI focus)

- Accessibility

- 25+ usability research studies

- 5+ generative research studies
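For teams that want to keep the three approaches in a planning script or shared notebook, the thresholds above can be captured as a small lookup. This is a sketch under stated assumptions rather than an official selection rule: the `Approach` class and `suggest_approach` function are hypothetical names, budget is left out because the article gives no figures for it, and requiring a team to meet both the age and headcount thresholds is a conservative simplification of the "one or more criteria" wording.

```python
from dataclasses import dataclass

USABILITY_DIVERSE = "Usability (diverse population)"
USABILITY_OTHER = (
    "Usability (any other population, or a different diverse population)"
)
EXPERIENCE_GAP = "Experience Gap Research (DEI focus)"
ACCESSIBILITY = "Accessibility"


@dataclass(frozen=True)
class Approach:
    """Hypothetical record of one approach and its entry thresholds."""
    name: str
    min_team_years: int
    min_researchers: int
    streams: tuple


# Ordered from smallest to largest; thresholds are taken from the lists above.
APPROACHES = (
    Approach("Pond", 1, 1, (USABILITY_DIVERSE, USABILITY_OTHER)),
    Approach("Lake", 4, 3,
             (USABILITY_DIVERSE, USABILITY_OTHER, EXPERIENCE_GAP)),
    Approach("Ocean", 7, 5,
             (USABILITY_DIVERSE, USABILITY_OTHER, EXPERIENCE_GAP,
              ACCESSIBILITY)),
)


def suggest_approach(team_years, researchers):
    """Return the largest approach whose thresholds are both met.

    Falls back to Pond, the recommended starting point.
    Assumption: both thresholds must be met (budget is not modeled).
    """
    chosen = APPROACHES[0]
    for approach in APPROACHES:
        if (team_years >= approach.min_team_years
                and researchers >= approach.min_researchers):
            chosen = approach
    return chosen


suggestion = suggest_approach(team_years=5, researchers=4)
print(suggestion.name)
for stream in suggestion.streams:
    print(" -", stream)
```

Treat the result as a starting point for discussion. A long-established team with only two researchers, for example, falls back to Pond under this rule, which matches the advice to start with the foundation-level approach.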

Timeline Example

See specific timeline examples of what these approaches might look like in action over four months for your team (Download our PDF resource).

UX Research platforms will continue to evolve and improve when it comes to the inclusivity of their panels. However, as it stands today, your team will need to take additional steps to ensure diverse participant populations.

When diverse recruiting is your research priority, and you need the speed of an online platform, we recommend User Interviews due to the diversity of their panel and because they don't limit the number of screening questions, which makes it easier for you to control which participants you recruit.

David Muñoz

David Muñoz, a member of our AnswerLab Alumni, worked with us as a Research Manager. He has led research efforts for in-house SaaS and mobile product teams in the technology, financial, and nonprofit sectors. David holds a B.S. in Psychology from Duke University and an M.S. in Human-Computer Interaction from Georgia Tech. David may not work with us any longer, but we'll always consider him an AnswerLabber at heart!
