
What in the UX is “Wizard of Oz Testing”?

Posted by Chris Geison on Aug 20, 2019

As more clients come to us for our expertise in conversational interfaces and other AI-powered products, we’ve been recommending “Wizard of Oz sessions” more and more frequently. Since Wizard of Oz is a methodology that’s less commonly known, we wanted to dive into what it is, how it works, and why we recommend it when you’re exploring voice products.

What is the Wizard of Oz methodology?

Wizard of Oz (WoZ) is a method where participants interact with a system that they believe to be autonomous but that is, in reality, controlled by an unseen human operator in the next room. It's a fantastic way to explore the experience of a complex, responsive system before committing resources and development time to actually building it.

“But I don’t understand,” said Dorothy, in bewilderment.

“How was it you appeared to me as a great head?”

“That was one of my tricks,” answered Oz. “Step this way, please, and I will tell you all about it.”

- L. Frank Baum, The Wonderful Wizard of Oz

How does WoZ work?

Wizard of Oz can be used for almost any interface, but it's particularly effective for prototyping AI-driven experiences: the range of possible system responses is virtually impossible to replicate with traditional prototyping tools, and the cost of building a working system just to test a concept is prohibitive.

Due to the variety and complexity of AI-driven experiences, and because this topic comes up most frequently around conversational interfaces, I'm focusing this article on using the WoZ method for prototyping voice technology.

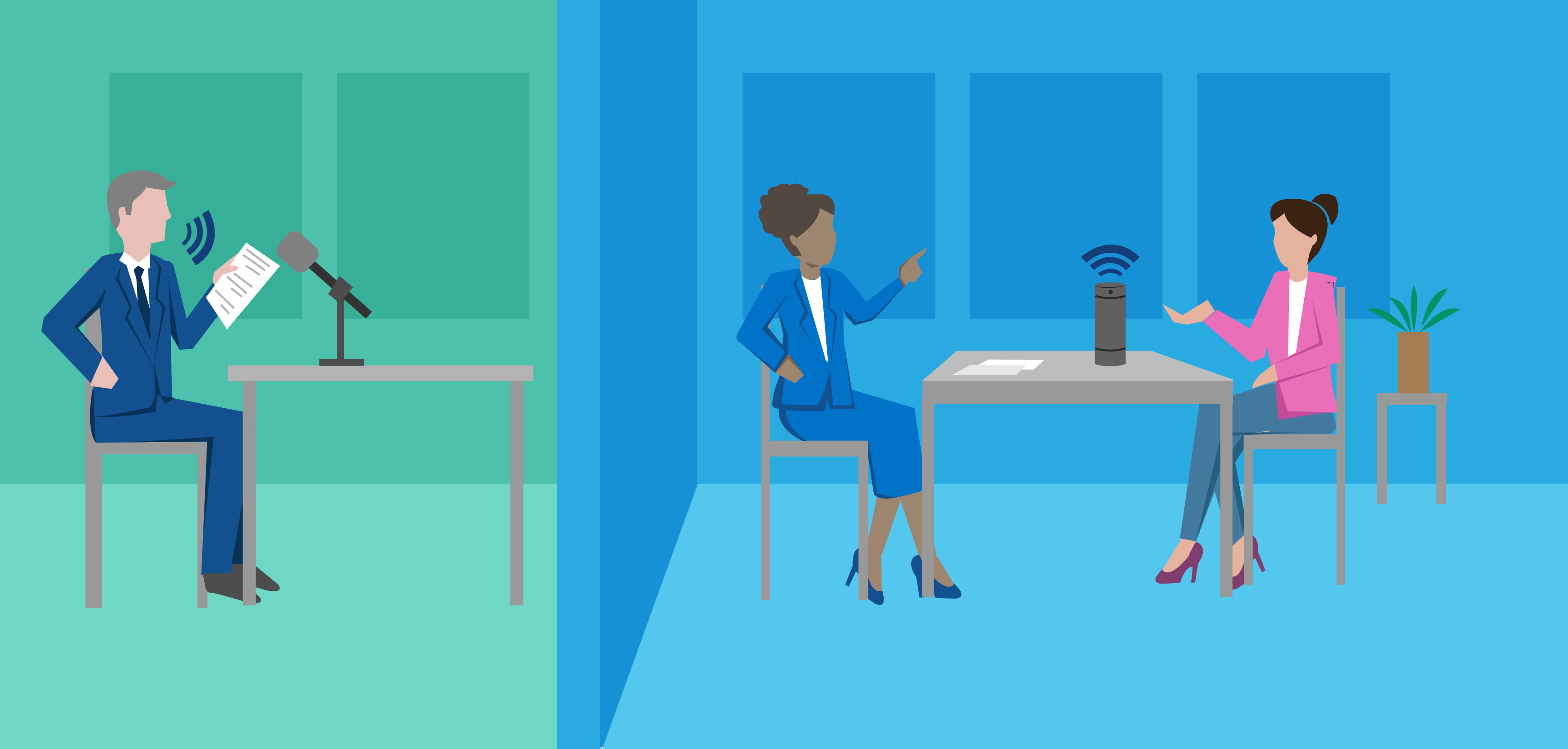

A research session works like this: the UX researcher is in one room with a participant who’s talking to a device that looks like a smart speaker or other voice-enabled product. In a separate room, another member of the UX research team controls the device’s responses. No actual code is involved, just a “man behind the curtain” pulling the levers like the Wizard of Oz.

Prototypes of varying fidelity

As with prototypes for apps or websites, this type of research can be done at varying levels of fidelity, and there are reasons to use each depending on where you are in your product development process and what you’re trying to test.

TL;DR: The higher the prototype fidelity, the more expensive it is to produce, and the more likely it is to surface insights about its usability instead of its utility.

For example, a low fidelity prototype may reveal that participants using a voice commerce app don’t want product reviews read to them by the device, but would instead prefer to have reviews emailed to them to read through later. A high fidelity prototype could refine the process and scripts used to offer product reviews via email to the user.

For this reason, we typically recommend starting with low or medium fidelity prototypes and then moving to high fidelity prototypes once the idea is solidified, but before any code is committed. Let’s take a look at how these work in practice.

Low Fidelity

A low fidelity WoZ prototype might work like this: in the room with the participant, a tennis ball canister wrapped in felt stands in for a smart speaker. Nearby is a phone in speaker mode, and the “Wizard” (who’s in another room) speaks directly through it as if they were the voice assistant, using scripted replies to respond to what the participant says. If your voice experience is built for another device type, like a mobile phone or clock, a simple representation of that device next to the phone will suffice.

The key benefit of low fidelity WoZ sessions is that they’re easy and inexpensive to run. Simply write some sample dialogs, set up your rooms, and run some tests. Low fidelity is a great way to test concepts and validate your approach before you move too far down a particular path. We’ve had clients come to us with fully developed Alexa Skills they wanted to test in beta only to find that participants wanted different functionality from that brand’s Alexa Skill. Concept testing with low fidelity WoZ prototypes could have saved them time and money.

Another important benefit of using low fidelity prototypes is that users understand they’re looking at an early-stage design, so they’re more likely to give honest feedback that could redirect your concept, rather than feedback that only improves the concept as designed. This aspect of low fidelity prototypes is one I hope all UX designers take to heart. Whether designing mobile apps or voice interactions, designers too frequently start with prototyping software instead of pencil and paper. As a result, we see user feedback that validates and optimizes the current design, rather than insights that explore users’ mental models and test concept hypotheses.

Medium Fidelity

A medium fidelity prototype works in almost the same way. Instead of the “Wizard” speaking into a phone, they could connect a Bluetooth speaker in the participant’s room to a computer in the “Wizard’s” room and use a text-to-speech (TTS) generator. TTS software, as the name suggests, takes in written text and renders it as computer-generated spoken audio. Amazon, Google, Microsoft, IBM, Nuance, and many other companies offer TTS systems you can use for this purpose. We won’t dive too deep into specifics here, but there is a range of voices available, from those users know well, like Alexa, Google Assistant, and Cortana, to voices they’ve likely never heard before.

In this case, the “Wizard” copies scripted responses and pastes them into the TTS software, which speaks them as replies to the research participant. The “Wizard” can also type responses as they go.
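To make the Wizard’s job more concrete, here is a minimal Python sketch of the kind of helper a team might use during a medium fidelity session. It is an illustration, not a tool we use or a standard setup: the short codes and scripted lines are hypothetical, loosely based on the voice commerce example above. A known code returns its scripted line, anything else is treated as a reply the Wizard typed on the fly, and an empty entry falls back to a repair prompt. The `speak` function is a placeholder that only prints; it does not call any real TTS service, so you would swap in whichever TTS tool your team uses.

```python
# Hypothetical Wizard helper for a medium fidelity WoZ session.
# The codes and scripted lines below are invented for illustration.

SCRIPT = {
    "g": "Hi! What can I help you shop for today?",
    "r": "Would you like me to email you the reviews for that product?",
    "c": "Okay, I've added that to your cart.",
    "?": "Sorry, I didn't catch that. Could you say it again?",
}

# Repair prompt used when the Wizard submits an empty entry.
FALLBACK = SCRIPT["?"]


def choose_reply(entry: str) -> str:
    """Return the reply the assistant should speak for the Wizard's entry.

    A known code selects its scripted line, an empty entry returns the
    repair prompt, and anything else is passed through as free-typed text.
    """
    entry = entry.strip()
    if not entry:
        return FALLBACK
    if entry in SCRIPT:
        return SCRIPT[entry]
    return entry


def speak(text: str) -> None:
    # Placeholder: in a real session this text would go to the team's
    # chosen TTS software. Here it is only printed.
    print(f"ASSISTANT: {text}")


if __name__ == "__main__":
    # Simulated Wizard entries during part of a session.
    for entry in ["g", "r", "", "Your order should arrive Tuesday."]:
        speak(choose_reply(entry))
```

Keeping scripted lines behind short codes lets the Wizard respond quickly enough to preserve the illusion, while the free-text path covers requests the script didn’t anticipate.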

The primary advantage of using TTS to render your voice prototype is that it sounds like the real thing, which makes it easier for participants to respond to the prototype as they would to the real experience. Medium fidelity prototypes aren’t significantly more difficult to create than low fidelity prototypes, and they’re just as easy to adjust on the fly. We find they’re an excellent way to refine designs at the conceptual level.

High Fidelity

There are a number of companies providing software to create high fidelity voice prototypes. And at AnswerLab, we can work with whatever software our clients prefer to use when prototyping these experiences. Now that Adobe XD has Alexa integration, allowing you to run your prototype directly on any Alexa-powered device (in addition to computers and mobile phones), we recommend Adobe XD to clients who haven’t decided on a prototyping software. But you can also use others, like Botmock, Voiceflow, and Botsociety Voice, which allow you to run web-based emulators of your voice prototype.

Regardless of which software you use, the point of a high fidelity voice prototype is to present research participants with a complete simulation of your fully fleshed-out voice experience. Whereas low and medium fidelity prototypes may direct participants toward specific tasks and flows to test the design’s concept, high fidelity prototypes should allow them to explore the entire experience. We find these prototypes help test the design’s usability from start to finish, rather than its high-level concepts and ideas.

Flip the Script

While this isn’t strictly a variation of Wizard of Oz (there’s no one “behind the curtain” operating the system out of sight), it deserves a mention. One way to understand users’ preferences for digital assistants is to turn the tables: ask the participant to pretend they’re the assistant while the researcher plays the user. The researcher makes requests of the system, and the participant responds the way they think the digital assistant should. This is often extremely revealing about users’ preferences and mental models, and it works well both for early concept testing and for exploring user preferences regarding the assistant’s personality.

...

We may all have conversations daily, but that doesn’t mean we’re experts in designing conversations. Linguists note that humans resolve misunderstandings in spoken communication about once every 90 seconds, and that’s with the benefit of all the non-verbal cues (e.g., facial expressions, gestures, tonality) we have available to us. Voice user interfaces (VUIs) don’t have the same ability to adjust, so VUI designers have to anticipate where misunderstandings might arise and nip them in the bud. The best way to foresee these missteps is through user research. Wizard of Oz sessions allow us to test voice prototypes before investing the time and expense of identifying every decision tree, scripting every turn, and committing it all to code.

It’s not magic, but it can benefit from using a Wizard.

Interested in learning more about testing voice user interfaces, especially in remote settings? Watch our webinar recording: Is This Thing On? How to Test Voice Assistants Remotely.

Contact us to talk about your voice strategy.

P.S. - While we’re on the subject of Wizard of Oz, let me just say that the movie The Wiz is better than The Wizard of Oz. Is it cringe-inducingly bad? Maybe. But in addition to a cast that includes Diana Ross, Nipsey Russell, and Lena Horne, the Wizard is played by Richard Pryor. Checkmate.

Chris Geison

Chris Geison, a member of our AnswerLab Alumni, conducted research to help our clients identify and prioritize insights to improve business results. Chris focuses on emerging technologies, including Voice, AI, and connected products, and led a number of primary research initiatives on emerging technology during his time at AnswerLab. Chris may not work with us any longer, but we'll always consider him an AnswerLabber at heart!
